Asia-Pacific (APAC) policymakers are under pressure to strike a balance between artificial intelligence (AI) innovation and protections for people, markets, and security.

AI standards are emerging unevenly across APAC countries, creating cross-jurisdiction compliance challenges for financial services firms.

Since the EU AI Act entered into force in August 2024, governments and regulators in Singapore, Japan, South Korea, Australia, China, India, Hong Kong, Thailand, Indonesia, and the Philippines have been proposing regulations, building testing frameworks, and publishing codes of ethics.

South Korea is at the forefront, with the first comprehensive AI law in APAC. China is moving forward with legally binding content rules, while Singapore and Japan are setting standards through governance toolkits and assurance frameworks.

Australia and New Zealand, by contrast, are adopting targeted rules and revising existing sector legislation, with an emphasis on safety measures and light-touch governance.

This fragmented approach creates regulatory gaps, added complexity, and unequal protection across markets.

Despite this, there is visible alignment with EU-style requirements, particularly in risk-based tiers, content provenance, and system testing.

The US is urging Asian governments to avoid Europe’s heavy-handed model and is promising financing packages for countries that adopt American AI systems. As White House adviser Michael Kratsios warned: “You can follow the European model and be left behind… or choose freedom to build your own AI destiny.”

China, by contrast, is calling for collective governance. Speaking at the World Artificial Intelligence Conference (WAIC) in Shanghai, Chinese Premier Li Qiang said: “AI development must be weighed against security risks… how to find a balance between development and security urgently requires further consensus.”

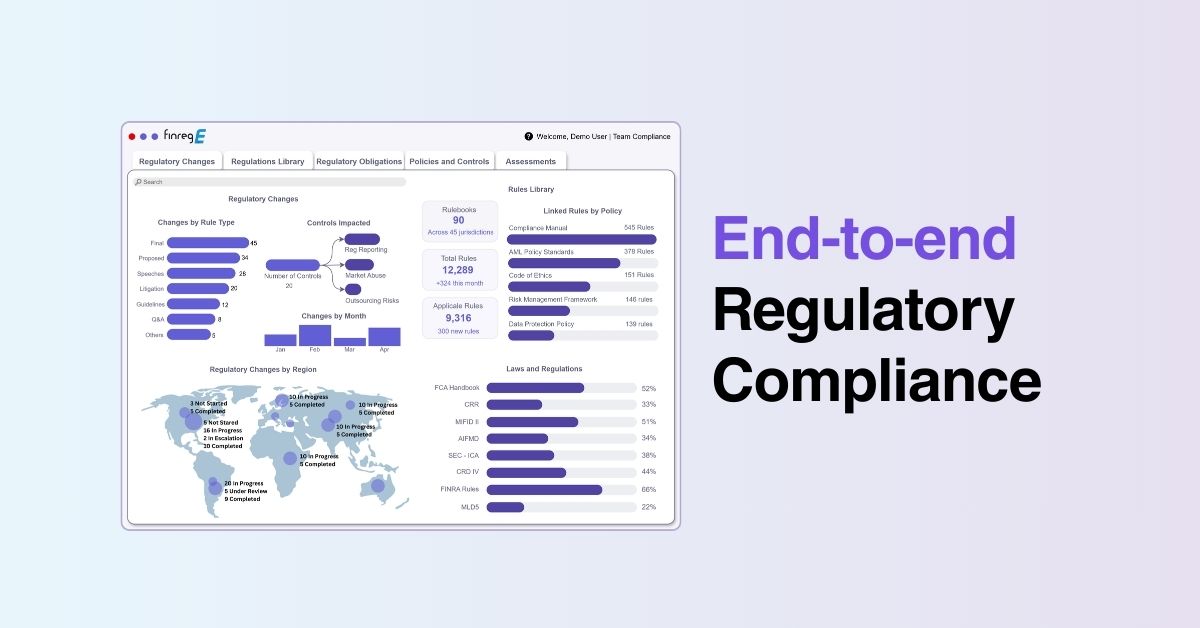

At FinregE, we help organisations see the whole picture. Our platform maps new rules across different jurisdictions and gives compliance teams the tools to quickly adapt without building ten separate programmes.

Rules across APAC

- South Korea has its own AI Basic Act, which is due to come into effect in 2026. Of all the APAC laws, it is the closest in structure to the EU AI Act.

- Vietnam has passed its Digital Technology Industry Law, which also takes effect in 2026 and sets out a risk-based framework.

- Japan and Taiwan are drafting laws while also standing up testing and assurance institutes.

- Singapore is betting on frameworks and toolkits, such as AI Verify, that companies can use to test their AI systems and demonstrate accountability.

- China already enforces rules such as algorithm filings, labelling of synthetic content, and security assessments for public-facing models.

- Australia is preparing guardrails for high-risk uses in health, credit, and hiring.

- New Zealand is positioning itself as a “sophisticated adopter,” updating existing privacy and consumer rules rather than writing new AI laws.

- India, Indonesia, Hong Kong, and the Philippines are building sector-specific rules and privacy-led safeguards, with India and Hong Kong pressing ahead on labelling and transparency.

This mix reflects not just different policy viewpoints but also different levels of capacity. Singapore is ready to lead with governance tools, while Laos and Myanmar, at the other end, are still catching up on basic digital readiness. A single regional model that fits every country is unlikely.

Southeast Asia does not want to take sides and is calling for neutrality. As Julian Gorman, GSMA’s Head of APAC, said at the East Tech West conference: “It’s very important that we continue to focus on not fragmenting the technology, standardising it, and working so that technology transcends geopolitics and ultimately is used for good.”

That means drawing lessons from the EU, the US, and China, while building its own capabilities.

Where APAC stands relative to the EU model

There are clear areas of convergence with Europe. Risk-based oversight appears in South Korea’s and Vietnam’s new laws and in Thailand’s draft regulations.

Testing and assurance are becoming a currency in procurement, with Singapore, Japan, and Hong Kong leading. Content authentication is gaining ground, with China mandating labelling and India pushing for disclosure notices.

But there are gaps. The EU is the only jurisdiction with clear obligations for general-purpose AI.

Most APAC rules focus on users and deployers, not model developers.

Enforcement is also uneven – China is strict today, while many others are still experimenting with voluntary codes.

ASEAN’s challenge: Principles without enforcement

The Association of Southeast Asian Nations (ASEAN) has tried to create some common ground with its Guide on AI Governance and Ethics. Updated in 2025 to cover generative AI, it lays out seven broad principles: transparency, fairness, security, reliability, human-centricity, privacy, and accountability.

The problem is that the guide is only voluntary. It reflects ASEAN’s non-interference approach, which means no binding rules and no enforcement. That makes it more of a reference document than a real safeguard.

And this gap matters. Malaysia and Thailand are now major hubs for data centres, but the rules around how those centres are managed are still loose.

Outside players can exploit this under-regulation to evade export restrictions. With Southeast Asia becoming a key part of the global AI supply chain, these gaps are not just technical risks; they can quickly become geopolitical vulnerabilities.

What this means for business leaders

- Compliance will get more complex. Different markets will require different evidence.

- EU-style documentation is likely to become the backbone, because it’s already filtering into supply chains.

- Shadow AI (tools staff use without formal approval) and unlabelled generative features are immediate red flags for regulators.

The smart move is to build one control stack that meets EU-style requirements, then adjust to local markets.

What to watch for in 2025–2026

- EU obligations for general-purpose AI began applying in August 2025, and most high-risk obligations are phased in over 2026–2027.

- South Korea’s and Vietnam’s new laws take effect in 2026.

- Australia is expected to firm up its guardrails by 2026.

- China is already stepping up filings and security assessments.

- Thailand and Indonesia are expected to turn their draft frameworks into binding rules.

- New Zealand will keep updating existing laws as AI adoption grows.

How FinregE can help

The regulatory landscape around AI is fragmented. FinregE tracks all new and existing rules in one place.

At FinregE, we provide:

- A horizon scanning module that ensures you never miss a consultation or a new law.

- Machine-readable digital rulebooks that translate regulations into actionable requirements.

- Testing frameworks aligned with EU documentation requirements, Singapore’s AI Verify, and sector-specific guidance.

- Dashboards that give leadership a clear view of where the firm stands and what is coming next.

You don’t have to run ten different compliance programmes. You can run one, with local adjustments where needed. This saves time, reduces duplication, and keeps regulators and customers confident that your organisation is ahead of the curve.

Book a demo today.